Wolves in the Walls

You'll often hear people say, "It's a miracle any game or film gets made," and this project is the embodiment of that.

Wolves in the Walls began as one of the final projects at the venerable Oculus Story Studio, before it was shut down. Reviving the project became the basis for founding Fable Studio. I came on as a fresh Fable employee, ready to build on the talented work that had gone into pre-production.

The team was an amazingly talented mix of folks from CG animation, video games, and visual effects. I will never forget the sense of camaraderie we all had, plugging away at this project in our little loft office in the heat of San Francisco's Mission District.

We collaborated with the brilliant Third Rail Projects to define the choreography of the experience. This ranged from practical things, like making sure the user's headset cable doesn't wrap around their legs (remember when headsets had cables?), to lessons from immersive theater, like performing an object hand-off just right so the audience is as likely as possible to grab the object.

One highlight was achieving seamless level transitions with no loading screens, a significant feat for VR at the time: you could play each half of the experience as one fluid piece of immersive theater. Oh, and the entire piece was rendered with a beautiful watercolor post-process, which our CG supervisor Justin Schubert and our friends at Disbelief spent a significant amount of time optimizing to run at 90 frames per second. We did a lot of things that people said VR wasn't supposed to be able to do! The span of 11 milliseconds took on a very special meaning to me by the end of this project.
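The 11 millisecond figure comes straight from the refresh rate: at 90 frames per second, every frame (game logic, rendering, and the watercolor post-process included) has to finish within one-ninetieth of a second. A trivial sketch of that arithmetic:

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to simulate and render one frame at a given frame rate."""
    return 1000.0 / fps


# At 90 frames per second, everything must fit in roughly 11.1 ms per frame.
budget = frame_budget_ms(90)
print(f"{budget:.2f} ms per frame")  # prints "11.11 ms per frame"
```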

After a lot of hard work from a lot of talented people, the project was exhibited at the Venice and Tribeca film festivals, and in 2019 we won a Primetime Emmy for innovation in interactive media!

RIP J White, the best audio designer I've ever worked with. Wonderful human. Much love ❤️

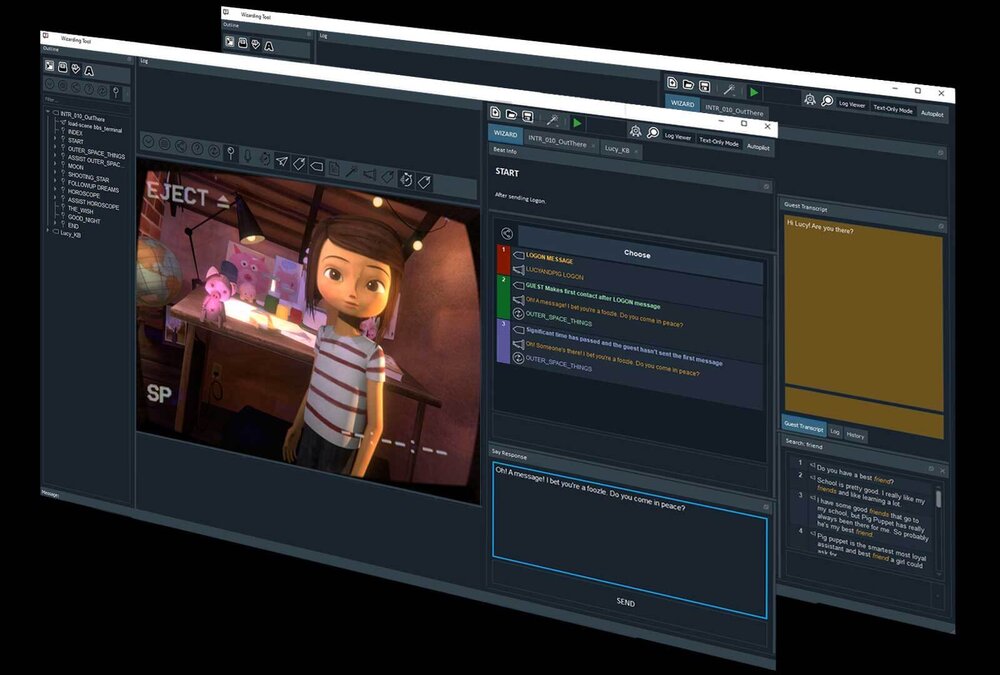

Virtual Beings - Wizard Engine

After creating such a rich character for Wolves in the Walls, we wanted to see how we could push Lucy to be even more responsive and fully realized than what we'd created for virtual reality. So we went to work studying the state of the art in dialogue systems and procedural animation, and worked with some really smart folks to experiment with recent machine learning results such as the GPT model.

This resulted in an amazing tour of technologies like Resemble.ai, SpiritAI, SpeechGraphics, DungeonAI, Replika, and many, many more. The team then built a suite of tools for driving Lucy during interactive sessions with her, such as chatting over a Google Hangout or playing Minecraft with her on Twitch. The result was essentially a test bench into which new technologies could be plugged in and out to explore automating different parts of the character.

It was marketed as the Wizard Engine because many of our demos still have a human in the loop, or "wizard," but as progress is made, less and less intervention is needed.
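The Wizard Engine's internals aren't described here, so the following is only a hypothetical sketch of the general pattern: automated modules are tried in order, and anything none of them can handle falls through to the human wizard. Every name (`ResponseProvider`, `CannedResponses`, `CharacterTestBench`) is invented for illustration, and the canned lookup table stands in for a real dialogue system.

```python
from typing import Callable, Optional, Protocol


class ResponseProvider(Protocol):
    """Hypothetical plug-in interface: anything that can propose the character's next line."""

    def propose(self, utterance: str) -> Optional[str]:
        ...


class CannedResponses:
    """Toy automated module standing in for a real dialogue system."""

    def __init__(self, table: dict[str, str]):
        self.table = table

    def propose(self, utterance: str) -> Optional[str]:
        return self.table.get(utterance.lower().strip())


class CharacterTestBench:
    """Tries each plugged-in module in order and falls back to the human wizard."""

    def __init__(self, providers: list[ResponseProvider], wizard: Callable[[str], str]):
        self.providers = providers
        self.wizard = wizard
        # Counting fallbacks gives a rough measure of how much is still hand-driven.
        self.wizard_interventions = 0

    def respond(self, utterance: str) -> str:
        for provider in self.providers:
            reply = provider.propose(utterance)
            if reply is not None:
                return reply
        self.wizard_interventions += 1
        return self.wizard(utterance)


bench = CharacterTestBench(
    providers=[CannedResponses({"hi lucy": "Hi! Do you want to build something?"})],
    wizard=lambda utterance: "[wizard types a reply]",
)
print(bench.respond("Hi Lucy"))            # answered by the automated module
print(bench.respond("What's your name?"))  # falls through to the wizard
```

Swapping a module in or out only means changing the `providers` list, which is the "test bench" property the paragraph describes.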

Star Wars: Droid Repair Bay

Droid Repair Bay was an official companion piece to Star Wars: The Last Jedi. The concept was chosen based on the success of the Meet BB-8 demo, and because droids are especially loved internationally, which made them a good fit for a marketing piece that could be installed all over the world.

The experience was released on Steam for the Vive and on the Oculus Go, and a 360 video was created as well! It also launched worldwide in marketing installations across China, Europe, and North America.

We teamed up with Schell Games on this project, and it was great working with them and learning about managing an external developer.

Meet BB-8

Meet BB-8 is a demo made exclusively for the Star Wars Celebration convention and displayed at the Starlight Foundation booth to help kick off the announcement that Starlight and Lucasfilm were teaming up for Star Wars Force For Change.

The demo allows the guest to "meet" BB-8 in an authentic Star Wars environment, where the beloved droid comes into the room and hangs out with them for a few minutes.

I designed and implemented this demo, and collaborated with a small team of developers to polish it for general public use. I used procedural animation to create a foundation of locomotion and look-at capabilities on which to build more complex behaviors. I then designed intuitive inputs based on the guest's body language to drive an AI behavior tree, resulting in a memorable experience that was a highlight of the show floor.
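The demo's actual inputs and tree aren't documented here. As a minimal, hypothetical sketch of the idea, body-language signals (the `GuestState` fields are assumptions) can feed a tiny behavior tree built from selector and sequence nodes, where each action would hand off to the procedural locomotion and look-at layers:

```python
from dataclasses import dataclass


@dataclass
class GuestState:
    """Hypothetical body-language inputs derived from headset and controller tracking."""
    distance_m: float   # distance from the guest's head to the droid
    is_crouching: bool  # head well below standing height
    is_waving: bool     # controller moving side to side


def condition(predicate):
    """Leaf node: succeeds when the predicate holds for the current guest state."""
    def run(guest, actions):
        return predicate(guest)
    return run


def action(name):
    """Leaf node: queues a named behavior (hypothetical) and succeeds."""
    def run(guest, actions):
        actions.append(name)
        return True
    return run


def sequence(*children):
    """Succeeds only if every child succeeds, stopping at the first failure."""
    def run(guest, actions):
        return all(child(guest, actions) for child in children)
    return run


def selector(*children):
    """Succeeds on the first child that succeeds (a priority fallback)."""
    def run(guest, actions):
        return any(child(guest, actions) for child in children)
    return run


bb8_brain = selector(
    # Guest crouches down to droid height nearby: roll over and say hello.
    sequence(condition(lambda g: g.is_crouching and g.distance_m < 1.5),
             action("roll_to_guest"), action("chirp")),
    # Guest waves: look at them and do a happy spin.
    sequence(condition(lambda g: g.is_waving),
             action("look_at_guest"), action("happy_spin")),
    # Otherwise, wander and look around the room.
    action("idle_wander"),
)


def tick(guest):
    """Run one update of the tree and return the behaviors it selected."""
    actions = []
    bb8_brain(guest, actions)
    return actions


print(tick(GuestState(distance_m=1.0, is_crouching=True, is_waving=False)))
```

Putting each condition first in its sequence means no behavior is queued unless its trigger holds, and the selector's ordering encodes which body-language cue wins when several apply.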

Millennium Falcon Ride

This yet-to-be-named ride for Star Wars land involves riding on the Millennium Falcon! Holy cow!

I made some small contributions during the preproduction phase of this project, consulting and creating prototypes. It was such a pleasure to meet real-life Imagineers and feel like I helped make magic happen at Disneyland, however small.

Sources of Inspiration for Accessibility in Virtual Reality

One of the great privileges of my career was to attend a Star Wars Celebration convention as an employee of Lucasfilm and share Trials on Tatooine with the fans directly on the show floor of the convention.

Over the course of the convention we served thousands of guests at our booth. It was effectively the largest single public playtest we had ever run, and it included guests whose conditions exposed usability issues in Trials on Tatooine that created barriers for them.

This experience affected me profoundly and sparked an interest in researching what other industries and disciplines have done to address accessibility in physical and virtual spaces. The result is a GDC lecture, Sources of Inspiration for Approaching Accessibility in VR, written with my colleague Hannah Gillis, along with an online survey conducted in collaboration with Alice Wong. The lecture focuses on taking inspiration from architecture, theme parks, and film set design.

Trials on Tatooine

Trials on Tatooine is a "virtual reality cinematic experiment" set in the Star Wars universe for the HTC Vive. This project was an incredible experience to work on. It was the first time any of us had attempted to tell a coherent story in a virtual reality context.

My personal highlight has to be designing and implementing the feel of the iconic Star Wars lightsaber: prototyping with an Oculus DK2 and Razer Hydras before we could get our hands on some of the first Vive developer kits, working with a rendering engineer to get the blur trail of the blade just right, collaborating with an audio designer to create a procedural sound system, and figuring out how to make deflecting incoming blaster fire feel fun... but also cinematic and authentic to the movies... and can you make it feel like you're rewarded for using the Force? It was a truly multidisciplinary effort and my definition of dream-job material.

Trials on Tatooine required a great deal of traditional gameplay programming work, and I also helped break down a linear, movie-style script into a gameplay flow chart, identifying branches and additional dialog needs. It was a really small team, so there were lots of opportunities to contribute.

Oh, and we also crammed the Millennium Falcon model from ILM in there somehow. Might be a vert or two missing from the movie version, don't tell anyone!

Transformers: The Last Knight VR

This Transformers VR project was fun because we were allowed to be as bombastic and ridiculous as we wanted. Explosions, slow motion, robots, fast cars, guns blazing at all times.

My favorite system on this project came out of a collaboration with level designers: it let them visually choreograph, in the level editor, the path that chasing enemies should take, and define which transformation state (i.e., car or biped) the enemies should be in at any given moment. The AI would figure it out from there!
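The production system lived in the studio's engine and isn't shown here. A minimal, hypothetical sketch of the idea: designers author waypoints tagged with a transformation state, and the AI starts transforming a little before reaching a waypoint that asks for a different state. `Waypoint`, `Mode`, `plan_mode`, and `transform_lead` are all invented names.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    CAR = "car"
    BIPED = "biped"


@dataclass
class Waypoint:
    x: float
    y: float
    mode: Mode  # transformation state the designer authored for this point


def plan_mode(path, next_index, pos, current_mode, transform_lead=5.0):
    """Choose the transformation state for an enemy heading toward path[next_index].

    If the upcoming waypoint asks for a different mode and the enemy is within
    `transform_lead` units of it, start transforming now so the change lands on
    cue; otherwise keep the current mode.
    """
    target = path[next_index]
    distance = math.hypot(target.x - pos[0], target.y - pos[1])
    if target.mode != current_mode and distance <= transform_lead:
        return target.mode
    return current_mode


chase_path = [
    Waypoint(0, 0, Mode.CAR),
    Waypoint(20, 0, Mode.CAR),
    Waypoint(30, 0, Mode.BIPED),  # designer wants the robot on foot here
]
print(plan_mode(chase_path, 2, (10, 0), Mode.CAR))  # still far from the switch point
print(plan_mode(chase_path, 2, (27, 0), Mode.CAR))  # close enough to begin transforming
```

Keeping the transformation state as data on the path, rather than in per-enemy scripts, is what lets designers retime a chase entirely in the editor.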

Broken Age: Gameplay

Broken Age is a classic adventure game with a modern approach. As a gameplay programmer, I was responsible for taking the artwork, animation, writing, and puzzles our talented team generated and integrating it all into a cohesive gameplay experience: implementing the vision behind the artwork and creating an interactive world for players to take part in.

This meant coding new features into our engine, prototyping game mechanics, scripting levels, authoring dialog and cutscene assets, and writing tools for artists.

Being on a small team meant constant communication with teammates was crucial, and it gave me many opportunities to have an impact on different areas of the game, from taking part in puzzle brainstorming sessions, to discussing sequence blocking with animators, to talking through the workflow challenges artists face.

Broken Age: User Interface

I was the principal programmer for Broken Age's title and in-game menus, writing a lightweight 2D UI framework in our MOAI-based engine. I worked closely with art director Lee Petty to integrate the artwork created by designer Cory Schmitz, and polished the final interface with procedural animations and sound.

Broken Age's UI has a light and playful feel to complement its bright, saturated color palette. Dynamic objects in the UI have an implied weight: everything is animated to slide, spring, and bounce into place with a certain heft that just feels right.
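Broken Age's actual tuning values and engine code aren't shown here. One common way to get a slide-and-settle-with-heft feel is a damped spring. The sketch below is a generic version, integrated with semi-implicit Euler, with stiffness and damping values chosen arbitrarily for illustration.

```python
def spring_step(pos, vel, target, dt, stiffness=300.0, damping=18.0):
    """Advance a damped spring by one time step using semi-implicit Euler.

    `stiffness` pulls the element toward `target`; `damping` bleeds off velocity.
    Less damping relative to stiffness means more overshoot, i.e. more bounce.
    """
    accel = stiffness * (target - pos) - damping * vel
    vel += accel * dt
    pos += vel * dt
    return pos, vel


# Slide a panel in from 200 px off-screen to rest at x = 0, at 60 updates per second.
pos, vel = -200.0, 0.0
peak = pos
for _ in range(120):  # two seconds of animation
    pos, vel = spring_step(pos, vel, target=0.0, dt=1 / 60)
    peak = max(peak, pos)
print(f"overshoot: {peak:.1f} px, final: {pos:.3f} px")
```

With these (assumed) values the spring is underdamped, so the panel overshoots its resting spot slightly before settling, which reads as weight rather than a mechanical linear slide.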

This UI is incredibly flexible. It is displayed on computer monitors, tablets, and phones using the same artwork and the same code path. It is localized into English, Italian, French, German, and Spanish, and the active language can be changed at any time.

Mnemonic

Mnemonic was a prototype made for Amnesia Fortnight 2014. I really loved working on this project! The art is beautiful and moody, and the gameplay is quite unique.

You play as a young psychiatrist in late-1940s San Francisco, in an alternate universe where the atomic bomb was never dropped. The gameplay takes place in a series of incomplete memories that must be reconstructed. The clues are loose connections made through "dream logic": an abstract shadow reminds you of an object in another dream; a lantern becomes the headlight of a car.

One thing I love about Amnesia Fortnight is that you get to explore roles you have not had the chance to try before! So in addition to gameplay programming, I got to contribute a lot to the puzzle design and story on Mnemonic. I am especially proud of the "skyline key" puzzle.

The Cave

My first project as a freshly minted programmer at Double Fine! I learned so much working with an extremely talented team to bring this unique adventure game to life.

As a gameplay programmer on The Cave, I worked on the opening and closing levels, and on the Twins' and the Knight's character-specific levels.

Haptic Snow

This is a project I worked on in the summer of 2008 in the Shared Reality Lab at McGill: a graphical simulation of different ground materials to augment a tile system built for simulating the haptic perception of stepping on different materials. I worked mostly with Alvin Law under the supervision of Professors Jeremy Cooperstock and Paul Kry.

For example, when the tiles were running in "snow mode," my simulation would render a snow landscape on top of them. Using motion capture, I tracked the user's feet and deformed the ground material based on how much pressure they exerted on the ground, effectively creating footprints in the snow.

A heightfield is dynamically deformed based on the forces exerted on the tiles. The deformation is a fast, efficient GPU operation, yielding a real-time simulation for our environment. A paper describing the system can be found here.
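The original ran on the GPU and its exact deformation model isn't given here. The following is a CPU sketch in plain Python of one plausible approach: the tracked foot pushes snow down by a depth proportional to pressure, with a radial falloff. The pressure-to-depth scale (`newtons_per_unit`), the footprint radius, and the falloff shape are all assumptions.

```python
def make_snowfield(rows, cols, depth=1.0):
    """A flat layer of fresh snow, `depth` units deep everywhere."""
    return [[depth] * cols for _ in range(rows)]


def press_footprint(heights, foot_x, foot_y, pressure,
                    radius=2, newtons_per_unit=100.0, snow_level=1.0):
    """Compress snow around a tracked foot position (modifies `heights` in place).

    Target depth grows with pressure and falls off with distance from the center
    of the foot. Snow is only ever compressed: a lighter step over an existing
    footprint leaves the deeper print intact, and heights never go below zero.
    """
    depth = pressure / newtons_per_unit
    rows, cols = len(heights), len(heights[0])
    r2 = radius * radius
    for y in range(max(0, foot_y - radius), min(rows, foot_y + radius + 1)):
        for x in range(max(0, foot_x - radius), min(cols, foot_x + radius + 1)):
            d2 = (x - foot_x) ** 2 + (y - foot_y) ** 2
            if d2 > r2:
                continue  # outside the circular footprint
            falloff = 1.0 - d2 / (r2 + 1)  # 1 at the center, smaller toward the edge
            pressed = max(0.0, snow_level - depth * falloff)
            heights[y][x] = min(heights[y][x], pressed)


snow = make_snowfield(7, 7)
press_footprint(snow, 3, 3, pressure=50.0)  # firm step
press_footprint(snow, 3, 3, pressure=20.0)  # lighter step in the same spot
print(snow[3][3], snow[3][4], snow[0][0])
```

Taking the minimum of the current and pressed heights is what makes footprints persist; on a GPU the same per-cell rule maps naturally onto one thread or fragment per heightfield texel.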

See this recent video of the system; it reflects further work done on the floor system, which earned the system acceptance into SIGGRAPH 2009 as an installation.

orthOg

Another student project, written in Java using the Lightweight Java Game Library while I worked through the NeHe tutorials to teach myself immediate-mode OpenGL. It's an investigation of music and rhythm and how they can be linked to effective gameplay.

This project went unfinished, but it was very fruitful for learning the basics of OpenGL and implementing a beat detection algorithm.
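orthOg's own beat detector isn't described here. One classic approach popular with hobbyists compares each short block's energy against the average energy of roughly the previous second. The minimal pure-Python sketch below uses block size, history length, and sensitivity threshold as assumed parameters, not values from the original project.

```python
def detect_beats(samples, block_size=1024, history_blocks=43, sensitivity=1.3):
    """Simple energy-based beat detection.

    Splits the signal into blocks, computes each block's energy (sum of squared
    samples), and flags a beat when a block's energy exceeds `sensitivity` times
    the average energy of the preceding `history_blocks` blocks. With the default
    1024-sample blocks at 44.1 kHz, 43 blocks is roughly one second of history.
    Returns the indices of blocks that contain a beat.
    """
    energies = [
        sum(s * s for s in samples[i:i + block_size])
        for i in range(0, len(samples) - block_size + 1, block_size)
    ]
    beats = []
    for i, energy in enumerate(energies):
        if i < history_blocks:
            continue  # not enough history yet to judge what "loud" means
        local_average = sum(energies[i - history_blocks:i]) / history_blocks
        if energy > sensitivity * local_average:
            beats.append(i)
    return beats


# Synthetic test signal: quiet blocks with a loud block every 8th block.
signal = []
for block in range(32):
    amplitude = 1.0 if block % 8 == 7 else 0.1
    signal.extend([amplitude] * 4)
print(detect_beats(signal, block_size=4, history_blocks=4))
```

Comparing against a local average, rather than a fixed threshold, lets the same detector work through both quiet and loud passages of a song.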

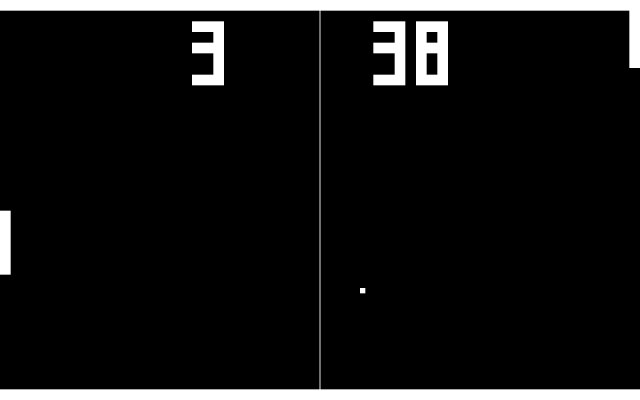

Java Pong Clock

This was a fun student project based on a concept from a piece of artwork I saw. I wrote it in Java using some weird built-in 2D graphics library. Two AI-driven opponents play pong, and the score of the game reflects the current time.
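The original Java code isn't reproduced here. The core trick can be sketched as follows: both AI paddles play perfectly until the clock ticks over, and then one paddle deliberately misses so the score catches up. The hours-on-the-left, minutes-on-the-right layout and the function names are assumptions.

```python
from datetime import datetime
from typing import Optional


def clock_score(now: datetime) -> tuple[int, int]:
    """Assumed layout: hours on the left scoreboard, minutes on the right."""
    return now.hour, now.minute


def paddle_to_miss(displayed: tuple[int, int], now: datetime) -> Optional[str]:
    """Decide which AI paddle should deliberately miss so the score tracks the clock.

    Returns None while the scoreboard already shows the current time; both
    paddles then return every ball.
    """
    if displayed == clock_score(now):
        return None
    hours, _ = clock_score(now)
    if displayed[0] != hours:
        return "right"  # hour rolled over: the left player must score
    return "left"       # minute ticked: the right player must score


print(paddle_to_miss((10, 41), datetime(2024, 1, 1, 10, 42)))
print(paddle_to_miss((10, 59), datetime(2024, 1, 1, 11, 0)))
```

After the point is scored, the displayed score can simply be set to `clock_score(now)`, which also resets the minutes when the hour rolls over.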